Create a Video Conference Recorder using WebRTC, MediaDevices, and MediaRecorder.

One of the most exciting things about the current web development landscape is the ability to use ‘hot off the press’ browser APIs to build native web apps with functionality that once required tinkering with Flash plugins or forcing users to upgrade their version of Java. Here at Forio, we choose browser-native features and open-source technologies over proprietary ones whenever possible.

One of our current projects is a training simulation in which users work together to improve their counseling skills. In this project, users role-play as counselors and clients over the web, and the video is uploaded to a server so they can give each other constructive feedback afterwards. Inspired by this project, today I will present a quick tutorial that uses Firefox’s MediaDevices, MediaRecorder, and WebRTC APIs, along with a Node server on the backend and the video processing tool FFmpeg, to create a video conference recorder app that showcases how far browsers have come since the early days of the web. While currently only Firefox supports all three web APIs, Google Chrome supports two and will unveil support for the MediaRecorder API early next year. With the age of auto-updating browsers upon us, it is my hope that in the near future all major browsers will run the demo smoothly.

Before we get started, you can find the repo with all the code used for the tutorial here.

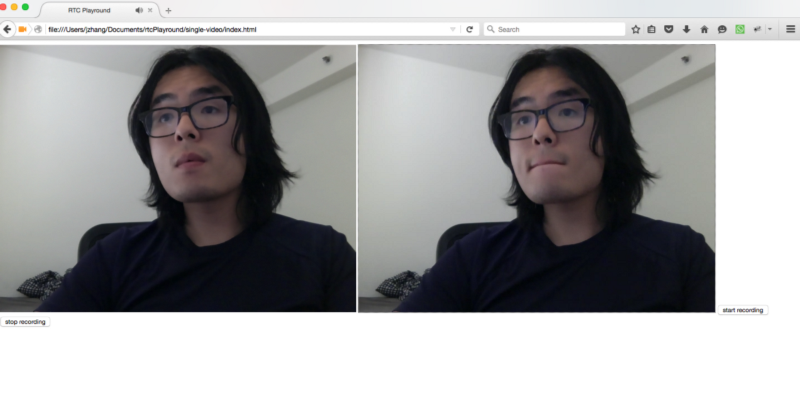

Create a Single-Page Video Recorder

First, let’s create a simple site that records users’ videos and plays them back. You can find the tutorial code in the singe-video directory of the repo.

MediaDevices.getUserMedia API

One of the coolest things about the modern browser is its support for hardware device access, and getting a stream of user audio/video data is as simple as the following snippet:

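    // Ask for camera and microphone access; the promise resolves with a MediaStream.
    navigator.mediaDevices.getUserMedia({
      audio: true,
      video: true
    })
    .then(function (stream) {
      videoStream = stream;
      // srcObject replaces the older createObjectURL(stream) approach,
      // which current browsers no longer accept for streams.
      document.getElementById('video').srcObject = stream;
    })
    .catch(function (error) {
      console.log('cannot access camera or microphone', error);
    });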

Window.MediaRecorder

Once we have the media stream in place, we can create a new MediaRecorder object that takes the media stream and an optional parameter object that contains output parameters.

    window.recorder = new MediaRecorder(videoStream, {
      mimeType: 'video/webm'
    });
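Not every browser records every container or codec, so it can help to probe before constructing the recorder. The sketch below assumes the static MediaRecorder.isTypeSupported method is available; the pickMimeType helper and the candidate list are hypothetical names used for illustration and are not part of the tutorial repo.

```javascript
// Hypothetical helper: return the first MIME type the recorder accepts,
// or undefined so the browser can fall back to its default.
// `isSupported` is injectable so the function can be exercised outside a browser.
function pickMimeType(candidates, isSupported) {
  for (var i = 0; i < candidates.length; i++) {
    if (isSupported(candidates[i])) {
      return candidates[i];
    }
  }
  return undefined;
}

// In a browser (assumes MediaRecorder.isTypeSupported exists):
// var mimeType = pickMimeType(
//   ['video/webm;codecs=vp8', 'video/webm'],
//   function (type) { return MediaRecorder.isTypeSupported(type); }
// );
// window.recorder = new MediaRecorder(videoStream, mimeType ? { mimeType: mimeType } : {});
```

Passing the support check in as a function keeps the selection logic separate from the browser API, which makes it easy to try with a stub.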

Next, set the recorder’s ondataavailable callback to handle the video data it returns (as a Blob), start the recorder, and we are in business.

    window.recorder.ondataavailable = videoDataHandler;
    window.recorder.start();

Now all we have to do is stop the recorder when we are done recording, create an object URL from the binary data we get back from the recording, and play it back to the user. We have ourselves a video recorder!

    function videoDataHandler (event) {
      var blob = event.data;
      // A Blob can still be turned into an object URL for playback.
      document.getElementById('blob-video').setAttribute('src', window.URL.createObjectURL(blob));
    }

Upload Your Video to a Server

Once we have the video recorder working, we immediately run into the issue that Firefox becomes unstable while recording videos longer than a few minutes (understandably, most programs not designed to do so tend to run into a few issues when handling hundreds of megs of data). Just as importantly, video files this large take at least a few minutes to upload to the server, which isn’t acceptable because we want the users to see their videos right away.

Since most of us do not have the good fortune of attending video conferences less than a few minutes long, we need to find a way to split up and save the video data. Luckily, the MediaRecorder’s start method takes an optional timeslice parameter, the number of milliseconds between chunks, which controls how often video data is generated and therefore how often ondataavailable is called.
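As a rough sketch of the chunked approach, passing a timeslice to start makes the recorder hand over a blob roughly once per interval instead of one large blob at the end. The startChunkedRecording wrapper and the 5000 ms value are assumptions chosen for illustration.

```javascript
// Hypothetical wrapper around a MediaRecorder-like object.
// timesliceMs: how often (in milliseconds) a chunk should be emitted.
// onChunk: called with each non-empty Blob as it arrives.
function startChunkedRecording(recorder, timesliceMs, onChunk) {
  recorder.ondataavailable = function (event) {
    // The recorder may fire with an empty blob; skip those.
    if (event.data && event.data.size > 0) {
      onChunk(event.data);
    }
  };
  recorder.start(timesliceMs);
  return recorder;
}

// In a browser:
// startChunkedRecording(window.recorder, 5000, videoDataHandler);
```

Smaller intervals mean smaller uploads and less data lost if the tab crashes, at the cost of more messages to handle on the server.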

But what is the best way to send these video blobs to our server? We could use Ajax requests, but those can run into concurrency issues, and what format should the binary data take? FileReader offers a few ways to convert a blob (a binary string or a base64-encoded string, to name just two), but sending binary strings over Ajax is inefficient, and with base64 video data strings we ended up with corrupt ending characters that rendered the videos unplayable. After much trial and error, it turns out the best way to upload the video is to use WebSocket’s binary data capabilities and send the video data as an ArrayBuffer.

Create a simple Node Express server with WebSocket capabilities and set the client connection’s binaryType to ‘arraybuffer’:
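Text encodings also cost bandwidth. To make that concrete, here is a small Node sketch (it uses Node’s Buffer rather than the browser’s FileReader) that compares a raw payload with its base64 form. The base64Overhead helper and the 300-byte payload are arbitrary choices for illustration.

```javascript
// Compare the size of raw bytes with their base64 text encoding.
// base64 emits 4 characters for every 3 input bytes.
function base64Overhead(byteLength) {
  var raw = Buffer.alloc(byteLength, 0xab); // dummy payload
  var encoded = raw.toString('base64');
  return { rawBytes: raw.length, base64Chars: encoded.length };
}

console.log(base64Overhead(300)); // { rawBytes: 300, base64Chars: 400 }
```

Sending the bytes as binary avoids that one-third increase, on top of sidestepping the decoding problems described above.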

    var websocketEndpoint = 'ws://localhost:7000';
    connection = new WebSocket(websocketEndpoint);
    connection.binaryType = 'arraybuffer';

Window.FileReader

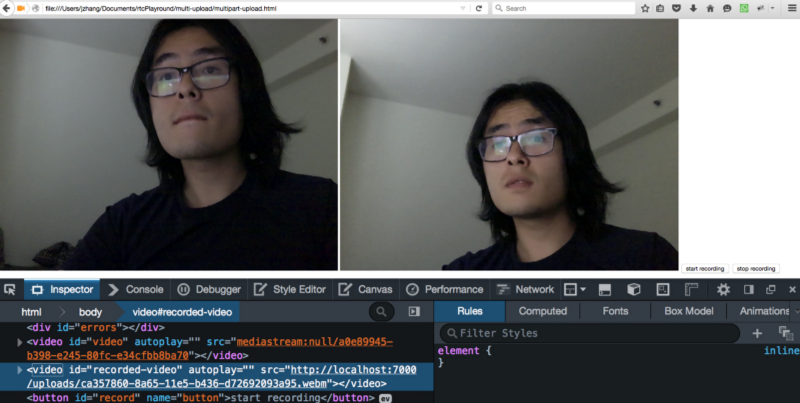

Then when we get the blobs of video data, convert them into array buffers via FileReaders and send them over the socket. On the other side of the connection, our Node server receives these buffers, stores them as .webm files on the server, and serves them back as static content.

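    var reader = new FileReader();
    // Register the handler before starting the read.
    reader.onloadend = function () {
      // reader.result is an ArrayBuffer holding the chunk's bytes.
      connection.send(reader.result);
    };
    reader.readAsArrayBuffer(event.data);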
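On the server side, the storage step could look something like the following. This is a minimal sketch, assuming every chunk of a recording is appended in arrival order to a single file; the repo may organize its files differently, and the appendChunk helper and its parameters are hypothetical.

```javascript
var fs = require('fs');
var path = require('path');

// Hypothetical helper: append one binary chunk to the .webm file for a recording.
// `chunk` may be a Buffer or an ArrayBuffer, depending on the WebSocket library.
function appendChunk(directory, recordingId, chunk) {
  var buffer = Buffer.isBuffer(chunk) ? chunk : Buffer.from(chunk);
  var filePath = path.join(directory, recordingId + '.webm');
  fs.appendFileSync(filePath, buffer);
  return filePath;
}

// With a WebSocket server library whose sockets emit 'message' events
// (an assumption about the server setup), this could be wired up as:
// socket.on('message', function (data) {
//   appendChunk('./public/videos', 'demo', data);
// });
```

Writing into a directory that Express already serves statically means the file is available for playback as soon as the chunks land.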

Record a Video Conference

“Neat, but I thought this was a video conference tutorial?” I hear you thinking. Fear not, eager video conference fans: now we use some WebRTC magic to connect participants (even though they will still look more or less like me).

Window.mozRTCPeerConnection

The creation of an RTC connection consists of three separate phases: the creation of the peer connection object and the attachment of media streams, the session description offer/answer handshake, and lastly the ICE candidate exchange and the resulting remote media stream exchange.

Creating the PeerConnection

To create a video conference, we’ll first grab the user media stream as before, then create a mozRTCPeerConnection object with STUN/TURN server parameters and attach our local stream to the peer connection.

    var rtcConfig = { iceServers: [
      { urls: ['stun:stun.l.google.com:19302'] }
    ]};
    // mozRTCPeerConnection is Firefox's prefixed name for RTCPeerConnection.
    pc = new window.mozRTCPeerConnection(rtcConfig);
    pc.addStream(stream);

RTC Handshake

The next step of the RTC connection consists of the creation of an offer session description from one peer connection and the corresponding creation of an answer session description from the other browser. Note that these two session descriptions must be sent via a third party channel, which in our case will be the Node socket server that we created in the earlier part of this post.

    var rtcConstraints = {
      'OfferToReceiveAudio': true,
      'OfferToReceiveVideo': true
    };

    pc.createOffer(
      function (sessionDescription) {
        // Apply the offer locally, then send it to the other peer through the socket server.
        pc.setLocalDescription(sessionDescription, function () {
          var message = { sessionDescription: sessionDescription, type: 'offer' };
          connection.send(JSON.stringify(message));
          offerCreated = true;
        },
        function (error) {
          console.log('cannot set local description');
        });
      },
      function (error) {
        console.log(error);
        console.log('cannot create offer');
      },
      rtcConstraints
    );
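For the offer to reach the other browser, the socket server has to pass it along. A minimal sketch of that relay step is below, assuming the server keeps a list of connected clients that each expose a send method; relaySignal is a hypothetical name, and the repo’s server may route messages differently.

```javascript
// Hypothetical relay: forward a signaling message to every connected
// client except the one that sent it. `clients` is any array of objects
// with a send(string) method, such as WebSocket connections.
function relaySignal(clients, sender, rawMessage) {
  var delivered = 0;
  clients.forEach(function (client) {
    if (client !== sender) {
      client.send(rawMessage);
      delivered += 1;
    }
  });
  return delivered;
}
```

The server never needs to parse the session description itself; it only has to tell JSON signaling messages apart from the binary video chunks arriving on the same socket.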

Sharing ICE Candidates

The last thing we need to do to create an RTC connection is to set the onicecandidate handler, which takes care of the exchange of the ICE candidate information between two parties, the completion of which triggers the onaddstream event, carrying the media stream from the other party.

    pc.onicecandidate = handleIceCandidate;

    function handleIceCandidate(event) {
      if (event.candidate) {
        // Forward each local candidate to the other peer through the socket server.
        connection.send(JSON.stringify({
          type: 'candidate',
          label: event.candidate.sdpMLineIndex,
          id: event.candidate.sdpMid,
          candidate: event.candidate.candidate
        }));
        selfCandidates.push(event.candidate.candidate);
      } else {
        // A null candidate signals that gathering has finished.
        console.log('end of candidates');
      }
    }

    pc.onaddstream = handleRemoteStreamAdded;

    function handleRemoteStreamAdded(event) {
      // srcObject replaces the older createObjectURL(stream) approach.
      document.getElementById('video-two').srcObject = event.stream;
      setConferenceID(CONFERENCE_ID);
    }
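The receiving side has to undo that packaging before handing the candidate to its own peer connection. Here is a sketch of that inverse step, assuming the message shape used in handleIceCandidate above; toCandidateInit is a hypothetical helper, and the commented usage assumes the browser provides RTCIceCandidate and addIceCandidate (prefixed in older Firefox releases).

```javascript
// Hypothetical inverse of handleIceCandidate: rebuild the candidate
// fields from a signaling message of type 'candidate'.
// Returns null for any other message type.
function toCandidateInit(rawMessage) {
  var message = JSON.parse(rawMessage);
  if (message.type !== 'candidate') {
    return null;
  }
  return {
    sdpMLineIndex: message.label,
    sdpMid: message.id,
    candidate: message.candidate
  };
}

// In a browser, on the receiving peer:
// connection.onmessage = function (event) {
//   if (typeof event.data !== 'string') { return; }
//   var init = toCandidateInit(event.data);
//   if (init) { pc.addIceCandidate(new RTCIceCandidate(init)); }
// };
```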

Now, just like before, we’ll have each party send chunks of video data to our Node server to be saved on disk. We will use the excellent FFmpeg tool to first strip out the audio portion of the videos and re-encode them to include timestamps, then place them side by side to create one video with both parties. With that, we’ve got ourselves a video conference recording site!
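For the side-by-side step, one option is FFmpeg’s hstack filter. The sketch below only builds the argument list and is not necessarily the pipeline used in the repo; the sideBySideArgs helper and the file names are hypothetical, and it assumes both inputs have already been re-encoded to the same height.

```javascript
// Hypothetical helper: build FFmpeg arguments that place two videos side by side.
function sideBySideArgs(leftPath, rightPath, outputPath) {
  return [
    '-i', leftPath,
    '-i', rightPath,
    // hstack joins the two video streams horizontally; inputs must share a height.
    '-filter_complex', '[0:v][1:v]hstack=inputs=2[v]',
    '-map', '[v]',
    '-an', // drop audio, matching the audio-stripping step described above
    outputPath
  ];
}

// Could be run from Node with, for example:
// require('child_process').execFile(
//   'ffmpeg', sideBySideArgs('a.webm', 'b.webm', 'out.webm'), callback);
```

Building the arguments as an array and passing them to execFile avoids shell quoting problems with file names.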